Contact Information

Electronic Design Automation, Embedded Systems Security

harris@ics.uci.edu

(949) 824-8842

https://ics.uci.edu/~harris/contact.html

Professor Harris’s Research

Research Areas

- Electronic Design Automation from Natural Language

- Embedded Systems Security

- Social Engineering Attack Detection

- Functional Verification

Professor Harris’s research interests include the design of secure hardware/software systems and the application of Natural Language Understanding to security and design. Current projects include the detection of phone-based social engineering attacks, the formalization of natural language specifications, and the development of a cyber test range to evaluate the security of IoT systems. Professor Harris serves as Associate Editor for the ACM Journal on Emerging Technologies in Computing Systems and IEEE Transactions on Dependable and Secure Computing. Professor Harris also serves on the technical program committees of several conferences, including the IEEE/ACM Design Automation Conference.

Selected Projects

Hardware/Software Covalidation

Key Researchers: Kiran Ramineni, Shireesh Verma

A hardware/software system is one in which hardware and software must be designed together and must interact to implement system functionality correctly. Combining hardware and software makes it possible to satisfy design constraints that neither technology could meet on its own. The widespread use of these systems in cost-critical and life-critical applications motivates the need for a systematic approach to verifying their functionality. Several obstacles make the verification of hardware/software systems a challenging problem that requires a major research effort; hardware verification complexity alone has grown to the point that it dominates the cost of design. To manage this complexity, many researchers are investigating covalidation techniques, in which functionality is verified by simulating (or emulating) a system description with a given test input sequence. Formal verification techniques, in contrast, verify functionality by using methods such as model checking, equivalence checking, and automatic theorem proving to precisely evaluate properties of the design. Because covalidation remains tractable for designs where formal techniques do not, it is the only practical solution for many real designs.

Covalidation involves three major steps: test generation, cosimulation, and test response evaluation. A key component of test generation is the covalidation fault model, which abstractly describes the expected faulty behaviors. We are investigating all aspects of the covalidation problem, with the goal of developing an automatic test generation tool that is demonstrated to detect the majority of likely design errors.
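The three steps can be made concrete with a toy model. Everything in the sketch below is hypothetical: the "design" is an 8-bit saturating accumulator, the fault model is a dictionary of two faulty variants standing in for classes of likely design errors, test generation is plain random sampling, and cosimulation is reduced to calling Python functions. It is not the fault model or tool from the referenced work; it only shows how a fault model turns test response evaluation into a measurable fault coverage.

```python
import random
from typing import Callable, Dict, List, Set, Tuple

Design = Callable[[List[int]], List[int]]

def reference(inputs: List[int]) -> List[int]:
    """Correct behavior: an 8-bit accumulator that saturates at 255."""
    acc, out = 0, []
    for x in inputs:
        acc = min(acc + x, 255)
        out.append(acc)
    return out

def no_saturation(inputs: List[int]) -> List[int]:
    """Faulty variant: the saturation check was omitted, so the sum wraps."""
    acc, out = 0, []
    for x in inputs:
        acc = (acc + x) % 256
        out.append(acc)
    return out

def off_by_one_limit(inputs: List[int]) -> List[int]:
    """Faulty variant: saturates at 254 instead of 255."""
    acc, out = 0, []
    for x in inputs:
        acc = min(acc + x, 254)
        out.append(acc)
    return out

# Hypothetical fault model: each entry stands for one class of likely design error.
FAULTS: Dict[str, Design] = {
    "no_saturation": no_saturation,
    "off_by_one_limit": off_by_one_limit,
}

def generate_tests(count: int, length: int, seed: int = 0) -> List[List[int]]:
    """Test generation, here simply random input sequences."""
    rng = random.Random(seed)
    return [[rng.randint(0, 100) for _ in range(length)] for _ in range(count)]

def covalidate(tests: List[List[int]], faults: Dict[str, Design]) -> Tuple[float, Set[str]]:
    """Simulate each test on the reference and on every faulty variant, then
    evaluate responses: a fault counts as detected if any output differs."""
    detected: Set[str] = set()
    for test in tests:
        expected = reference(test)
        for name, faulty in faults.items():
            if faulty(test) != expected:
                detected.add(name)
    return len(detected) / len(faults), detected

coverage, found = covalidate(generate_tests(count=20, length=10), FAULTS)
print(f"fault coverage = {coverage:.0%}, detected = {sorted(found)}")
```

A single input such as `[255]` detects only `off_by_one_limit`, which is the kind of gap that a fault-model-driven test generator would aim to close.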

Reference: I. G. Harris, “Fault Models and Test Generation for Hardware-Software Covalidation,” IEEE Design and Test of Computers, Vol. 20, No. 4, July-August 2003.

Testing Concurrent Software

Key Researcher: Patricia Lee

Concurrent programming is central to realizing the potential benefits of distributed and parallel computing. Concurrent programs are inherently more difficult to test and debug than their sequential counterparts because system control flow spans many parallel threads of execution. The step-by-step behavior of a concurrent program is nondeterministic because it depends on several external factors, including the operating system’s scheduling algorithm. Nondeterministic behavior is not reproducible and is therefore more difficult to debug. Because whether a bug manifests depends on the nondeterministic execution sequence, a bug may not reveal itself until the system has been deployed.
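A small example of this schedule dependence, under assumed conditions: two hypothetical threads each increment a shared counter without a lock, and each increment is split into a read step and a write step. The sketch enumerates every interleaving of those four steps (feasible only for toy programs) and runs each one deterministically.

```python
from itertools import combinations
from typing import Iterator, Tuple

Schedule = Tuple[str, ...]

def interleavings(steps_a: int, steps_b: int) -> Iterator[Schedule]:
    """Yield every interleaving of thread A's and thread B's atomic steps,
    written as the sequence of thread ids the scheduler picks."""
    total = steps_a + steps_b
    for a_slots in combinations(range(total), steps_a):
        chosen = set(a_slots)
        yield tuple("A" if i in chosen else "B" for i in range(total))

def run(schedule: Schedule) -> int:
    """Execute two threads that each do `counter += 1` without a lock.
    Step 0 of a thread reads the shared counter; step 1 writes local + 1."""
    shared = 0
    local = {"A": 0, "B": 0}
    pc = {"A": 0, "B": 0}
    for tid in schedule:
        if pc[tid] == 0:
            local[tid] = shared        # read the shared counter
        else:
            shared = local[tid] + 1    # write back a possibly stale value + 1
        pc[tid] += 1
    return shared

for schedule in interleavings(2, 2):
    result = run(schedule)
    print("".join(schedule), result, "OK" if result == 2 else "lost update")
```

Only the two serial schedules, AABB and BBAA, leave the counter at 2; the other four lose an update. A test that happens to run under a serial schedule passes, which is how such a bug can survive until deployment.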

Concurrent programming requires synchronization and communication constructs (e.g., send, receive) to manage inter-process collaboration and contention. Incorrect use of these constructs can create bugs that are difficult to reproduce and trace. We are developing coverage metrics that indicate how effective a test sequence is at detecting synchronization errors. We are also developing automatic test generation techniques to ensure the detection of synchronization errors in arbitrary concurrent programs.

Reference: I. G. Harris, “On the Detection of Synchronization Errors,” TR 04-13, May 2004.

Test and Diagnosis of FPGAs

Over the past decade, field programmable gate arrays (FPGAs) have become invaluable components in many facets of digital design. Programmable FPGA functionality combines the flexibility of software with the performance of hardware. As a result of increased integration, FPGA devices are now used across a wide assortment of fault-tolerant and mission-critical digital platforms. This diversity of applications has increased interest in FPGA test, so that faulty components can be quickly identified and recovered. Because each FPGA device may support a large range of applications and programmable configurations, FPGA test can be substantially more complex than ASIC test, which motivates new, efficient testing techniques. Information about defect location is particularly important today, since techniques have been developed that reconfigure FPGAs to avoid faults. To operate effectively, these approaches require that the specific location of the fault be clearly identified.

We are developing built-in self-test (BIST) techniques that can be applied to FPGA testing with no permanent overhead, since the test circuitry occupies the programmable logic only while the device is being tested. We are also interested in on-line testing techniques that can be used during the normal functional operation of the system.
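As an illustration of how interconnect BIST can both detect and locate a fault, the sketch below models a bundle of FPGA routing wires in software. The choices are ours, not taken from the referenced paper: a walking-ones test pattern generator, an output response analyzer that compares received bits with sent bits, and two assumed fault models (a single stuck-at wire and a wired-AND bridge between two wires). On a real device the generator and analyzer would be configured into the programmable logic during test.

```python
from typing import Callable, List, Set

Bits = List[int]
Channel = Callable[[Bits], Bits]

def walking_ones(width: int) -> List[Bits]:
    """Test pattern generator: one pattern per wire, with only that wire driven high."""
    return [[1 if j == i else 0 for j in range(width)] for i in range(width)]

def stuck_at(wire: int, value: int) -> Channel:
    """Interconnect model in which one wire is stuck at 0 or 1."""
    def channel(bits: Bits) -> Bits:
        out = list(bits)
        out[wire] = value
        return out
    return channel

def wired_and_bridge(a: int, b: int) -> Channel:
    """Interconnect model in which wires a and b are shorted (wired-AND)."""
    def channel(bits: Bits) -> Bits:
        out = list(bits)
        out[a] = out[b] = bits[a] & bits[b]
        return out
    return channel

def bist_diagnose(width: int, channel: Channel) -> Set[int]:
    """Apply every pattern, compare received bits with sent bits (output
    response analyzer), and return the wires that ever mismatched."""
    suspects: Set[int] = set()
    for pattern in walking_ones(width):
        received = channel(pattern)
        for wire, (sent, got) in enumerate(zip(pattern, received)):
            if sent != got:
                suspects.add(wire)
    return suspects

print(bist_diagnose(8, stuck_at(3, 0)))          # {3}
print(bist_diagnose(8, wired_and_bridge(1, 2)))  # {1, 2}
```

Because each pattern drives exactly one wire high, a mismatch points directly at the wires involved, which is the kind of location information that fault-avoiding reconfiguration needs.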

Reference: D. A. Fernandes and I. G. Harris, “Application of Built in Self-Test for Interconnect Testing of FPGAs,” IEEE International Test Conference, September 2003.

Current Projects

Hardware Assisted Host-based Intrusion Detection

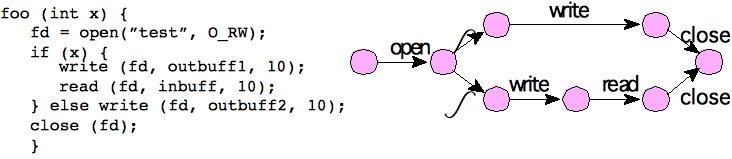

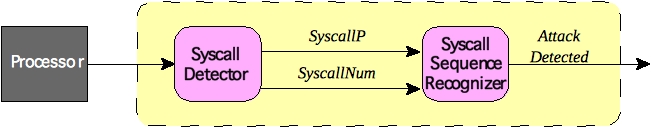

We are investigating a technique to implement host-based intrusion detection (HIDS) in hardware so that attacks can be detected as soon as their behavior deviates from correct system behavior. Our system is anomaly-based: a model of correct system behavior is generated at compile time, and any deviation from that behavior is treated as an attack. We characterize correct system behavior as a finite state machine that accepts all legal system call sequences.

The execution of system calls is detected in hardware (Syscall Detector) by examining the instruction at each clock cycle and the contents of specific internal registers. The legal system call sequences are captured as a finite state machine, which is implemented in hardware (Syscall Sequence Recognizer).

In this way, the execution of an illegal call sequence can be detected a single clock cycle after it occurs.
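A software model of the Syscall Sequence Recognizer helps show why detection is immediate: each detected system call advances the finite state machine by one transition, and a call with no legal transition raises the alarm at once. The transition table, state numbering, and system call names below are hypothetical; in the described system the machine would be derived from the program at compile time, and each step would take one clock cycle in hardware.

```python
from typing import Dict, List, Optional, Tuple

# (current state, system call) -> next state; missing entries are illegal.
Transitions = Dict[Tuple[int, str], int]

class SyscallSequenceRecognizer:
    """Software model of a recognizer FSM; in hardware, one step per detected call."""

    def __init__(self, transitions: Transitions, start: int = 0) -> None:
        self.transitions = transitions
        self.state = start

    def step(self, syscall: str) -> bool:
        """Consume one system call. Return False (alarm) if no legal transition exists."""
        nxt = self.transitions.get((self.state, syscall))
        if nxt is None:
            return False
        self.state = nxt
        return True

def first_violation(recognizer: SyscallSequenceRecognizer, calls: List[str]) -> Optional[int]:
    """Index of the first illegal call in the trace, or None if the trace is legal."""
    for i, call in enumerate(calls):
        if not recognizer.step(call):
            return i
    return None

# Hypothetical model of a program that opens a file, reads/writes it, closes it, and exits.
LEGAL: Transitions = {
    (0, "open"): 1,
    (1, "read"): 1,
    (1, "write"): 1,
    (1, "close"): 2,
    (2, "exit"): 3,
}

print(first_violation(SyscallSequenceRecognizer(LEGAL), ["open", "read", "write", "close", "exit"]))  # None
print(first_violation(SyscallSequenceRecognizer(LEGAL), ["open", "read", "execve", "close"]))  # 2
```

In this model `step` leaves the state unchanged after an alarm; how the hardware responds to an alarm is outside the scope of the sketch.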

Directed-Random Security Testing of Network Applications

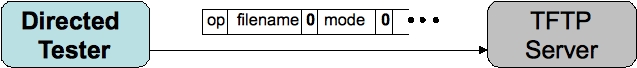

We propose a new directed-random fuzzing system that applies static analysis to the target source code to generate fuzzing constraints that rapidly expose vulnerabilities. The constraints are chosen to increase the execution frequency of potentially vulnerable code.

Networked applications, which receive network messages as input and respond to those messages, are the most common source of software security vulnerabilities because they are directly exposed to attack via the Internet. In networked applications, much of the code that executes depends directly on the values of fields in the network messages received as input. For example, the behavior of an HTTP server depends on the request method and header fields, and the behavior of a TFTP server depends on the opcode and mode fields. We analyze the source code of the networked application to identify these dependencies and use them to constrain test generation.
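To illustrate constraining test generation by message fields, the sketch below generates TFTP read/write requests in which the fields the server branches on (opcode and mode) are restricted to legal values from RFC 1350, while the filename is left random. This is a hypothetical example: the constraints are hard-coded rather than derived from static analysis of a server's source, and `max_name_len` is an arbitrary choice.

```python
import random
import struct
from typing import List

# Field values a TFTP server branches on (RFC 1350). In the approach described
# above such constraints would come from static analysis; here they are hard-coded.
REQUEST_OPCODES = (1, 2)  # RRQ, WRQ
MODES = ("netascii", "octet", "mail")

def directed_tftp_request(rng: random.Random, max_name_len: int = 512) -> bytes:
    """Build a request whose opcode and mode are constrained to legal values,
    while the filename is fully random (printable, never NUL)."""
    opcode = rng.choice(REQUEST_OPCODES)
    mode = rng.choice(MODES).encode("ascii")
    name_len = rng.randint(1, max_name_len)
    filename = bytes(rng.randint(0x21, 0x7E) for _ in range(name_len))
    return struct.pack("!H", opcode) + filename + b"\x00" + mode + b"\x00"

def is_well_formed_request(packet: bytes) -> bool:
    """Check the RRQ/WRQ layout: 2-byte opcode | filename | 0 | mode | 0."""
    if len(packet) < 4:
        return False
    (opcode,) = struct.unpack("!H", packet[:2])
    parts = packet[2:].split(b"\x00")
    return (
        opcode in REQUEST_OPCODES
        and len(parts) == 3
        and parts[0] != b""
        and parts[2] == b""
        and parts[1].decode("ascii", "replace").lower() in MODES
    )

rng = random.Random(0)
corpus: List[bytes] = [directed_tftp_request(rng) for _ in range(100)]
print(all(is_well_formed_request(p) for p in corpus))  # True
```

By contrast, a uniformly random 2-byte opcode selects a read or write request with probability 2/65536, so almost every unconstrained input would never reach the code that handles those requests.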

Specification-based Hardware Verification

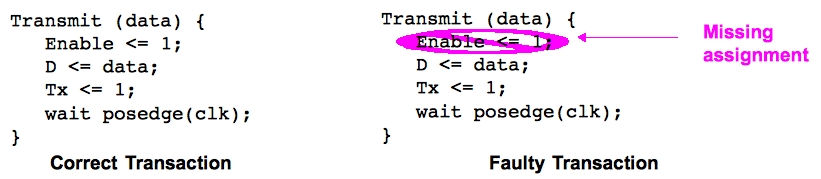

Misunderstanding the specification is a significant source of design errors. Detecting these errors requires tests generated directly from the specification, so that differences between the specification and the implementation can be identified. Transaction Level Models (TLMs) abstractly describe system behavior as a set of functions that encapsulate the details of functionality and communication. TLMs are the most abstract formal description of the specification, and we use them to generate specification-based test sequences.

Transactions describe sequences of input events that trigger a behavior in the correct system. A design with a specification-based error would behave like the correct design executing a mutated transaction. We therefore generate tests by mutating existing transactions, producing tests that differentiate the behavior of correct and erroneous designs.
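One way to read this approach, sketched with hypothetical names: a transaction is a list of input events for a simple memory interface, a reference model gives the specified behavior, and each mutant (one event dropped, or two adjacent events swapped) stands in for an erroneous design. A mutant whose outputs differ from the original's marks an erroneous behavior that the original transaction, used as a test, would expose. The mutation operators are illustrative and are not taken from the project.

```python
from typing import Iterator, List, Tuple

# Hypothetical transaction events for a simple memory interface:
# ("write", addr, data) or ("read", addr).
Event = Tuple
Transaction = List[Event]

def reference_model(txn: Transaction) -> List[int]:
    """Specified behavior: a read returns the last value written to that address, or 0."""
    mem, out = {}, []
    for event in txn:
        if event[0] == "write":
            _, addr, data = event
            mem[addr] = data
        else:
            _, addr = event
            out.append(mem.get(addr, 0))
    return out

def mutants(txn: Transaction) -> Iterator[Transaction]:
    """Two simple mutation operators: drop one event, or swap two adjacent events."""
    for i in range(len(txn)):
        yield txn[:i] + txn[i + 1:]
    for i in range(len(txn) - 1):
        yield txn[:i] + [txn[i + 1], txn[i]] + txn[i + 2:]

def distinguishing_mutants(txn: Transaction) -> List[Transaction]:
    """Mutants whose observable outputs differ from those of the original transaction."""
    expected = reference_model(txn)
    return [m for m in mutants(txn) if reference_model(m) != expected]

txn = [("write", 0, 1), ("write", 1, 2), ("read", 0)]
for m in distinguishing_mutants(txn):
    print(m)
```

For this three-event transaction only two of the five mutants are distinguished (dropping the first write, or dropping the read); the others leave the read of address 0 unchanged, which indicates where an additional transaction would be needed.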